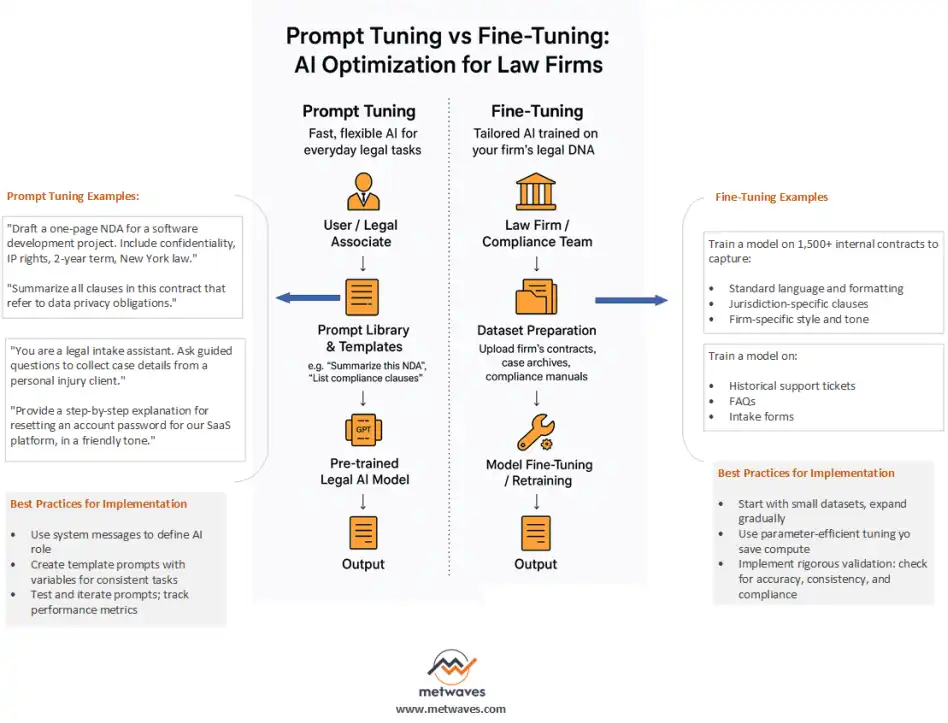

Law firms increasingly use AI to draft contracts, automate compliance checks, and power client-facing chatbots. Off-the-shelf LLMs can handle general language tasks, but legal work often demands domain-specific knowledge, consistency, and safety. To improve outputs, firms can either tune their prompts (prompt engineering or prompt tuning) or fine-tune their models. Fine-tuning adjusts a model’s internal weights via additional training on legal data, creating a specialized model.

By contrast, prompt tuning/engineering keeps the base model frozen and instead customizes the input prompts (manually or via “soft” prompt vectors) to steer the model’s output.

For example, Dextralabs describes prompt tuning as adding a small set of learnable prompt vectors ahead of each input; only these vectors are trained on legal examples, while the core model remains unchanged. In practice this means prompt tuning requires far less compute and data than full fine-tuning, yet can still yield noticeable improvements.
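The mechanics described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not Dextralabs' implementation or any particular library's API: the function names, vector sizes, and the zero-valued stand-in embeddings are all assumptions made for the example. It shows the two ideas that matter: the learned vectors sit ahead of the input, and they are the only trainable parameters.

```python
import random


def init_soft_prompt(num_virtual_tokens, hidden_dim, seed=0):
    """Create the small matrix of prompt vectors (the only part that is trained)."""
    rng = random.Random(seed)
    return [
        [rng.gauss(0.0, 0.02) for _ in range(hidden_dim)]
        for _ in range(num_virtual_tokens)
    ]


def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Place the learned vectors ahead of the frozen model's input embeddings."""
    return soft_prompt + token_embeddings


def trainable_parameter_count(num_virtual_tokens, hidden_dim):
    """Prompt tuning trains one vector of size hidden_dim per virtual token."""
    return num_virtual_tokens * hidden_dim


# Hypothetical sizes, chosen only to keep the example readable.
HIDDEN_DIM = 8
soft_prompt = init_soft_prompt(num_virtual_tokens=4, hidden_dim=HIDDEN_DIM)

# Stand-in for the embeddings of a 10-token legal clause from the frozen model.
clause_embeddings = [[0.0] * HIDDEN_DIM for _ in range(10)]

model_input = prepend_soft_prompt(soft_prompt, clause_embeddings)
print(len(model_input))                          # 14 (4 prompt vectors + 10 tokens)
print(trainable_parameter_count(4, HIDDEN_DIM))  # 32
```

In a real system the prompt vectors would be updated by gradient descent on legal examples while every weight of the base model stays fixed, which is why the trainable parameter count stays so small.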

Fine-tuning is more resource-intensive but deeply ingrains domain knowledge. It adapts a pretrained model to a specific task using a smaller, specialized dataset.

In a fine-tuned law model, every hidden layer can change to absorb legal terminology or firm-specific style. Dextralabs highlights that fine-tuning is advisable for regulated fields: it gives “a deep understanding of certain vocabulary, formats, and compliance standards” that generic LLMs lack.

A law firm might fine-tune an LLM on thousands of past contracts, pleadings, and memoranda so it learns the firm’s standards. In return it gets high consistency and specialization: a fine-tuned model will behave reliably across similar inputs, whereas a prompt-based model may vary more. Indeed, for mission-critical legal tasks—where any hallucination or inconsistency is unacceptable—fine-tuning often yields the safest output.

Cost-Benefit Comparison

Real-world numbers highlight the gap: OpenAI’s pricing shows GPT-4 fine-tuning costs $25 per million training tokens plus $3/$12 per million input/output tokens for inference. Base GPT-4 queries (no fine-tune) were listed at roughly $0.03/$0.06 per 1,000 input/output tokens. Fine-tuning therefore adds an up-front training charge on top of per-call token charges, and a fine-tuned model can be substantially more expensive to build and operate once data preparation, evaluation, and retraining are counted. Conversely, a well-designed prompt workflow can often be implemented at essentially the same cost as using the base model (just token charges for each call, plus engineering time).
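To make the arithmetic concrete, here is a rough cost sketch. The prices default to the fine-tuning figures quoted above ($25 per million training tokens, $3/$12 per million input/output tokens); the token volumes are hypothetical and should be replaced with a firm's own estimates and current published pricing.

```python
def token_cost(tokens, price_per_million):
    """Cost in dollars for a token count at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def fine_tuned_costs(training_tokens, input_tokens, output_tokens,
                     train_price=25.0, input_price=3.0, output_price=12.0):
    """Return (one-off training cost, inference cost) in dollars."""
    training = token_cost(training_tokens, train_price)
    inference = (token_cost(input_tokens, input_price)
                 + token_cost(output_tokens, output_price))
    return training, inference


# Hypothetical workload: 50M training tokens, then 10M input / 2M output tokens.
training, inference = fine_tuned_costs(
    training_tokens=50_000_000,
    input_tokens=10_000_000,
    output_tokens=2_000_000,
)
print(f"Training:  ${training:,.2f}")   # Training:  $1,250.00
print(f"Inference: ${inference:,.2f}")  # Inference: $54.00
```

The training figure is paid again each time the model is retrained on new data, which a prompt-only workflow avoids.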

Law Firm Use Cases

1. Contract Drafting and Review

Legal drafting is a prime use for AI. Firms can instruct the model (via prompts or fine-tuned training) to generate first drafts of contracts, NDAs, regulatory filings, motions, etc. For example, law firms now use AI to produce first drafts of contracts, motions, and letters in minutes, drawing on templates and language from past matters.

With prompt engineering, a lawyer might provide a detailed natural-language template prompt like “Draft an NDA between Company A and Company B, with clauses on confidentiality, term of 3 years, governing law New York” and refine the prompt iteratively. This can quickly yield usable drafts for editing. Soft prompt tuning could further improve this by inserting learned vectors that bias outputs toward the firm’s preferred style or clauses.

- NDA and Contract Templates: AI generates a base draft, then lawyers review. Prompt tuning can add firm-specific context (e.g. “prioritize data privacy”).

- Regulatory Filings (e.g. SEC filings, licenses): Complex documents where missteps are costly. Fine-tuning on past filings can ensure correct format and up-to-date legal requirements.

- Routine Clause Extraction: Prompt engineering can ask the model to “list key terms” or “summarize changes,” which helps with redlines and version comparisons.
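The NDA prompt quoted in this section can be turned into a reusable template so that lawyers change only the deal-specific fields. This is one possible sketch using Python's standard `string.Template`; the function name `build_nda_prompt` and the `firm_context` parameter are illustrative assumptions, not part of any drafting product.

```python
from string import Template

# The wording mirrors the example prompt in the text; the fields are placeholders.
NDA_PROMPT = Template(
    "Draft an NDA between $party_a and $party_b, with clauses on $clauses, "
    "term of $term_years years, governing law $governing_law."
)


def build_nda_prompt(party_a, party_b, clauses, term_years, governing_law,
                     firm_context=""):
    """Fill the template and optionally append firm-specific guidance."""
    prompt = NDA_PROMPT.substitute(
        party_a=party_a,
        party_b=party_b,
        clauses=", ".join(clauses),
        term_years=term_years,
        governing_law=governing_law,
    )
    if firm_context:
        prompt += " " + firm_context
    return prompt


prompt = build_nda_prompt(
    "Company A", "Company B", ["confidentiality"], 3, "New York",
    firm_context="Prioritize data privacy.",
)
print(prompt)
```

Keeping the template in one place makes iterative refinement easier: a change to the wording applies to every future draft request, while the firm-specific context stays a separate, optional addition.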

2. Compliance Workflows

Law firms often manage internal policies and compliance documents. AI can assist in drafting and updating policy manuals, compliance checklists, and training materials. For example, when regulations change, a firm could use AI to scan contracts or policies for outdated clauses, as LexWorkplace suggests. A prompt like “Identify any references to the [old regulation] in these documents” can highlight issues. For deeper integration, a fine-tuned model trained on historical compliance data could automatically flag risky language or suggest precise alternatives. Firms might also use AI to generate internal reports summarizing compliance status, or to compose standard notices. Here accuracy and up-to-date knowledge are critical, so fine-tuning on validated sources (or combining with retrieval) ensures that AI advice aligns with the firm’s protocols.

- Automated Policy Updates: AI flags clauses in internal documents that conflict with new laws or firm standards.

- Reviewing Precedents and Citations: Law firms can deploy specialized models to “cite-check” briefs. Harvey’s custom case-law model, for example, achieved an 83% jump in factual accuracy and was preferred by lawyers 97% of the time. A fine-tuned model or RAG system can provide similar cite-checks for firm memos.

- Internal Q&A and Summaries: For employee training or risk assessments, prompt-based chatbots can answer questions about the firm’s compliance policies, leveraging prompts that encode policy context. Soft prompt tuning can help the model learn the tone and emphasis needed for internal guidance.
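The regulation-scanning prompt described in this section works better when the model sees only the relevant passages. The sketch below is a simple pre-filter, under the assumption that documents are available as plain text: it finds lines that mention a regulation by name and then assembles the review prompt. The document names, sample text, and the regulation name "Old Privacy Act" are invented for illustration.

```python
import re


def find_regulation_references(documents, regulation_name):
    """Return (document name, line number, line) for each line naming the regulation."""
    pattern = re.compile(re.escape(regulation_name), re.IGNORECASE)
    hits = []
    for name, text in documents.items():
        for number, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((name, number, line.strip()))
    return hits


def build_review_prompt(regulation_name, hits):
    """Assemble a prompt asking the model to review only the matching excerpts."""
    excerpts = "\n".join(
        f"- {name}, line {number}: {line}" for name, number, line in hits
    )
    return (
        f"Identify any references to {regulation_name} in these excerpts "
        f"and explain why each may be outdated:\n{excerpts}"
    )


# Hypothetical documents for demonstration only.
documents = {
    "policy_manual.txt": (
        "Data is handled under the Old Privacy Act.\n"
        "Staff must complete training."
    ),
    "vendor_contract.txt": "Vendor complies with applicable law.",
}

hits = find_regulation_references(documents, "Old Privacy Act")
print(build_review_prompt("Old Privacy Act", hits))
```

An exact-name search will miss paraphrased or abbreviated references, so a production workflow would likely pair it with retrieval or a model pass over the full text.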

3. Client-Facing Chatbots and Intake

AI chatbots are increasingly used to handle basic client queries and intake. An AI assistant on a law firm website might answer common questions (e.g. “What is the statute of limitations for a slip-and-fall claim in California?”) or gather initial case details via chat. Prompt engineering is key here: one might design robust chat prompts (e.g. system messages like “You are a legal intake assistant for personal injury cases”) and use LangChain or similar frameworks to manage conversation flow. AI chatbots can provide 24/7 availability for client intake, instantly collecting case facts and scheduling consults. For example, a prompt-based bot can ask guided questions (“When did the incident occur?”) and parse the answers.

- Client Intake Bots: 24/7 chatbots that ask initial questions and collect leads (eve.legal). Prompt-chaining tools like LangChain can structure multi-turn prompts (e.g. system/user messages) to simulate an interview.

- Basic Legal Advice Assistant: For simple common questions (not legal advice per se, but factual info), prompts that reference a knowledge base ensure relevant responses. Fine-tuning on approved answer pairs can increase accuracy.

- Customer Support Chatbots: Beyond intake, firms might use chatbots to give status updates (“Where is my document in the signing process?”) or explain billing. These rely heavily on prompt engineering with backend integrations, while fine-tuning can help the model reflect firm-specific policies.
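The guided-question intake flow described in this section can be sketched without any framework. The example below keeps a system/user/assistant message list and a dictionary of collected answers. The system message and first question come from the text; the second question, the field names, and the sample replies are hypothetical, and no model or LangChain call is made here.

```python
SYSTEM_PROMPT = "You are a legal intake assistant for personal injury cases."

# Each entry pairs a field name with the guided question that fills it.
INTAKE_QUESTIONS = [
    ("incident_date", "When did the incident occur?"),
    ("location", "Where did the incident occur?"),  # hypothetical extra question
]


def start_conversation():
    """Begin the message history with the system prompt."""
    return [{"role": "system", "content": SYSTEM_PROMPT}]


def next_question(answers):
    """Return the first (field, question) not yet answered, or None when done."""
    for field, question in INTAKE_QUESTIONS:
        if field not in answers:
            return field, question
    return None


def record_answer(messages, answers, field, question, reply):
    """Log the question and reply in the history and store the parsed answer."""
    messages.append({"role": "assistant", "content": question})
    messages.append({"role": "user", "content": reply})
    answers[field] = reply.strip()


# Simulated client replies, for demonstration only.
replies = {"incident_date": "March 3", "location": " Los Angeles "}

messages = start_conversation()
answers = {}
while (step := next_question(answers)) is not None:
    field, question = step
    record_answer(messages, answers, field, question, replies[field])

print(answers)        # {'incident_date': 'March 3', 'location': 'Los Angeles'}
print(len(messages))  # 5
```

The resulting `messages` list is in the role/content shape most chat APIs accept, so the same history could be passed to a model to summarize the intake or decide on follow-up questions.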

Key Takeaway

In the evolving landscape of AI automation—especially within law firms and enterprise customer support—choosing between prompt tuning and fine-tuning is not just a technical decision; it’s a strategic one.

- Prompt tuning offers speed, flexibility, and lower cost, making it ideal for dynamically changing text environments like legal research assistants, compliance monitoring bots, or customer service chatbots.

- Fine-tuning, while more resource-intensive, delivers domain precision and context mastery, perfect for organizations dealing with proprietary legal frameworks, complex contracts, or multilingual compliance needs.

When combined strategically, both techniques form a hybrid approach—leveraging prompt templates for rapid iteration while reserving fine-tuning for high-stakes, high-accuracy models.

How Metwaves can help with AI Integration

At Metwaves, we specialize in helping enterprises harness the full potential of AI through tailored prompt engineering and fine-tuning strategies that align with your business goals.

Our expertise includes:

- Custom AI Development – Tailored AI agents that meet specific business needs.

- Integration with Existing Systems – Seamless deployment into current software ecosystems.

- Scalability & Security – AI models built for reliability and data protection.

- End-to-End AI Solutions – From research to deployment and ongoing support.

Prompt tuning is your first line of efficiency.

Fine-tuning is your key to excellence.

Metwaves is your partner in making both work together—scalable, compliant, and strategically aligned with your organizational goals.